Introduction

Firstly, what is headless Chrome? Well, it's very similar to the Google Chrome browser people use to browse the internet. The only difference is that rather than being controlled with a keyboard and mouse, it's controlled by software. The software can do everything a user would by issuing commands: rather than typing into the address bar, it can issue the command goto("google.com") to visit a site, and rather than moving the mouse and clicking, it can call button.click() to click on a button.

Headless Chrome can also take screenshots of the page it has loaded, which is why I came to use it. I needed to build a pool of over 1,000 software workers, each with its own headless Chrome window. Each worker would be given the address of a webpage so it could open the page, take a screenshot, then return that image to whoever needed it.
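The post doesn't show how the workers were organised, so here is a minimal, dependency-free sketch of one simple way to hand URLs to a fixed number of workers. `createWorkerPool` and `work` are hypothetical names; with Puppeteer, `work(url)` would open a page, call `page.goto(url)` and `page.screenshot()`, and return the image.

```javascript
// Minimal sketch of a fixed-size worker pool (hypothetical helper, not from the post).
// `work(url)` stands in for "open the page, screenshot it, return the image".
function createWorkerPool(size, work) {
  const queue = []; // jobs waiting for a free worker
  let busy = 0;     // number of workers currently running a job

  function runNext() {
    if (busy >= size || queue.length === 0) return;
    const { url, resolve, reject } = queue.shift();
    busy++;
    Promise.resolve()
      .then(() => work(url))
      .then(resolve, reject) // pass the image (or the error) back to the caller
      .finally(() => {
        busy--;
        runNext(); // this worker is free again: pick up the next queued job
      });
  }

  // Submit a URL; the returned promise settles with whatever `work` produced.
  return function submit(url) {
    return new Promise((resolve, reject) => {
      queue.push({ url, resolve, reject });
      runNext();
    });
  };
}
```

The pool size caps how many pages are open at once, while callers simply `await submit(url)` and get their screenshot back.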

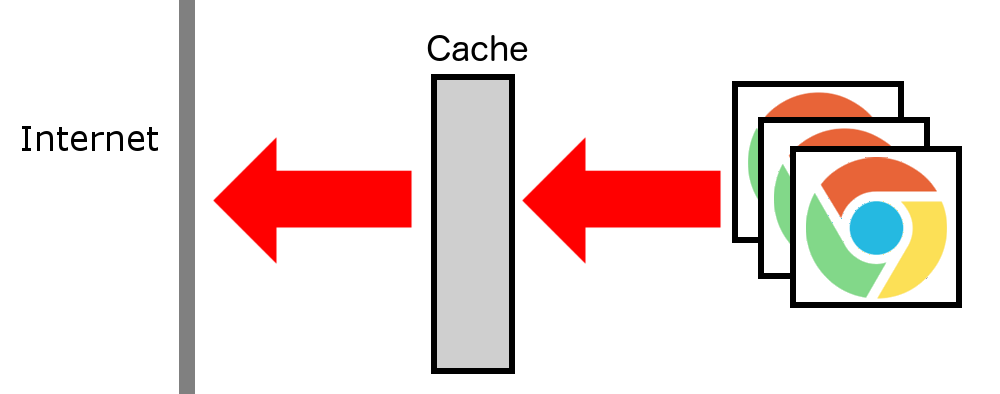

Loading webpages, images and stylesheets from the internet is slow, and different workers would often be requesting the same assets again and again. Things don't have to be like this, though! They can be improved by adding a cache proxy between the workers and the wider internet. Then, once one worker has requested an asset, every other worker can be served it from the cache without leaving the local network, saving bandwidth and improving performance.
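The caching idea can be illustrated in a few lines. This is a minimal in-memory sketch, not a real HTTP cache: it ignores `Cache-Control` and expiry, and `TinyCache` and `fetchFromInternet` are hypothetical names standing in for the proxy and a real HTTP fetch.

```javascript
// Minimal sketch of a shared cache (hypothetical; a real cache proxy also
// honours Cache-Control headers, expiry, size limits, etc.).
class TinyCache {
  constructor(fetchFromInternet) {
    this.fetchFromInternet = fetchFromInternet; // async (url) => body
    this.entries = new Map();                   // url -> Promise of the body
  }

  get(url) {
    if (!this.entries.has(url)) {
      // Store the promise itself, so workers asking at the same moment
      // share one outbound request instead of all missing the cache.
      const pending = Promise.resolve().then(() => this.fetchFromInternet(url));
      // Forget failures so a later request can retry.
      pending.catch(() => this.entries.delete(url));
      this.entries.set(url, pending);
    }
    return this.entries.get(url);
  }
}
```

Only the first request for each URL leaves the local network; every later request for it, from any worker, is answered from memory.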

Headless Chrome supports this: just tell it on startup to send its requests via the cache proxy, and everything works.

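```javascript
const puppeteer = require('puppeteer');

// Address of the cache proxy (example value; substitute your own)
const PROXY_URL = 'http://cache-proxy.internal:3128';

// Chrome takes its proxy as a command-line flag, so it is fixed at launch time
puppeteer.launch({ args: [`--proxy-server=${PROXY_URL}`] }).then(async browser => {
  const page = await browser.newPage();

  // Ready for requests
});
```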

The end? I'm afraid not...

The problem

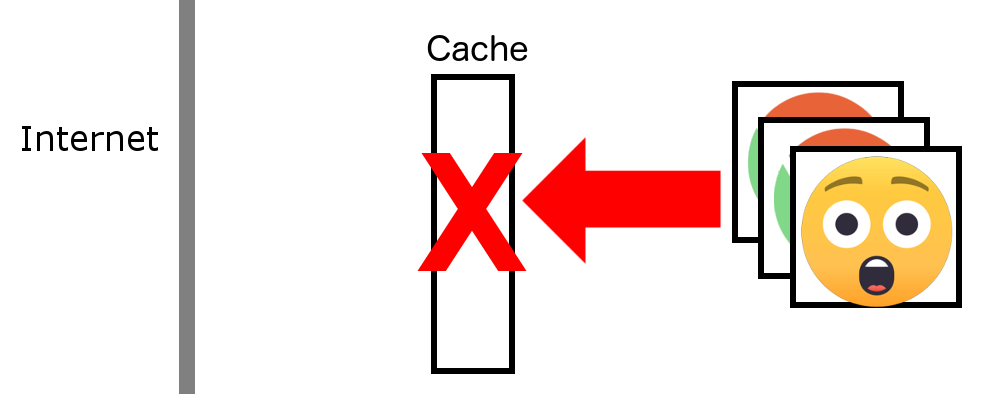

The above solution works... until it doesn't. We have introduced a single point of failure: if the proxy breaks, every worker stops working at once. This is bad; something needs to change.

One solution might be to design the proxy not to fail, but it costs big $$$ to build and manage something that stable, with downtime-free updates, hot backups in multiple regions, etc. That is fine for the Googles and Facebooks of the world, but not everyone has that luxury.

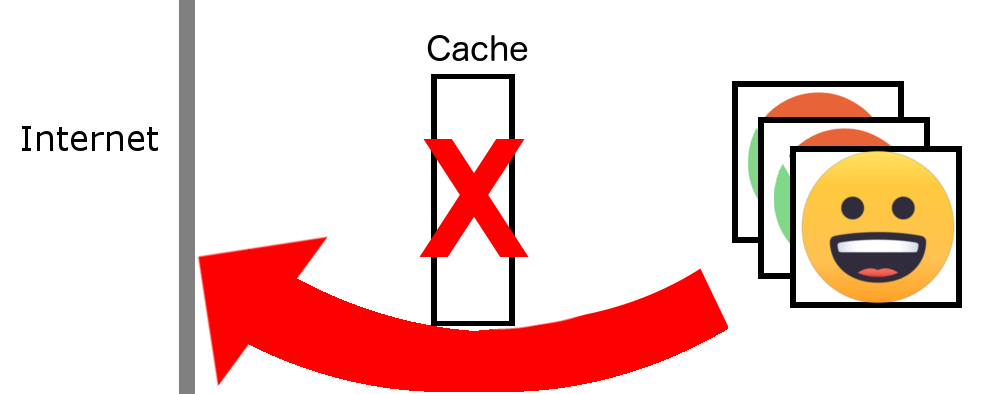

Instead, we need to accept that the proxy could disappear at any moment and deal with it by immediately re-routing requests around the proxy, directly out to the internet. That might make the workers 100ms slower after a failure, but nothing catastrophic would happen.
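The re-routing rule itself is small. Below is a minimal sketch, assuming a hypothetical `fetchVia(url, proxyUrl)` function where `proxyUrl === null` means "go direct"; `FallbackRouter` and `retryAfterMs` are also illustrative names, not from the post or any library.

```javascript
// Sketch: try the proxy, and on failure go straight out to the internet.
// fetchVia(url, proxyUrl) is a hypothetical async HTTP fetch; null = direct.
class FallbackRouter {
  constructor(fetchVia, proxyUrl, { retryAfterMs = 30000, now = Date.now } = {}) {
    this.fetchVia = fetchVia;
    this.proxyUrl = proxyUrl;
    this.retryAfterMs = retryAfterMs; // how long to skip a proxy that just failed
    this.now = now;                   // injectable clock, handy for testing
    this.proxyDownUntil = 0;          // timestamp until which the proxy is skipped
  }

  async fetch(url) {
    if (this.now() >= this.proxyDownUntil) {
      try {
        return await this.fetchVia(url, this.proxyUrl);
      } catch (err) {
        // Proxy failed: remember that, then fall through to a direct request.
        this.proxyDownUntil = this.now() + this.retryAfterMs;
      }
    }
    return this.fetchVia(url, null);
  }
}
```

Remembering the failure for `retryAfterMs` means only the first request after an outage pays for the failed proxy attempt; the rest go straight out until the proxy is tried again. One caveat of this sketch: it treats any thrown error as a proxy failure, whereas a proxy that is up but cannot reach a site would usually answer with an HTTP error status rather than throw.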

Great in theory, but headless Chrome doesn't support that. Chrome needs to be told which proxy to use on startup, and it can't be changed afterwards. This means that to disable the proxy we would need to restart every browser in every worker at once, a task that could easily take 30 seconds or more.

All is not lost

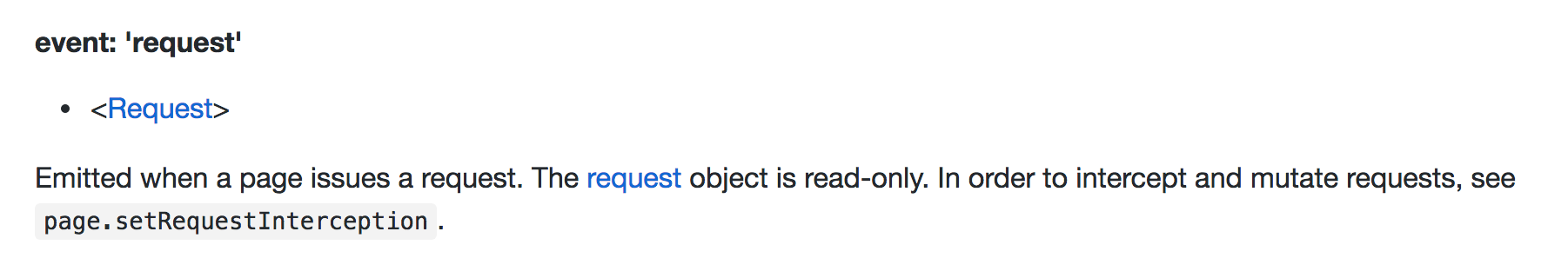

Headless Chrome doesn't support changing the proxy without a restart, so I dug deep into the docs to find what it could do. That is where I discovered the command setRequestInterception(). It lets us intercept every network request the browser makes and either allow it or block it; for example, you could allow the page to load but block any images and videos to improve performance. It also lets us answer a request with a response of our own.
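The allow/block use of interception can be sketched without a browser. This handler relies only on three methods Puppeteer's request object provides, `resourceType()`, `abort()` and `continue()`; `BLOCKED_TYPES` and `blockHeavyMedia` are illustrative names.

```javascript
// Sketch of allow/block interception. With Puppeteer you would register it via
//   await page.setRequestInterception(true);
//   page.on('request', blockHeavyMedia);
const BLOCKED_TYPES = new Set(['image', 'media']); // 'media' covers audio and video

function blockHeavyMedia(interceptedRequest) {
  if (BLOCKED_TYPES.has(interceptedRequest.resourceType())) {
    return interceptedRequest.abort();  // never fetched at all
  }
  return interceptedRequest.continue(); // everything else loads normally
}
```

Whatever the rule, every intercepted request has to be resolved one way or another, or the page will sit waiting for it.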

If we can intercept every network call, we can stop the request from going to the internet directly, make the call ourselves instead, and pass the result back to the browser. If we are the one making the call (not the browser), we can send it wherever we want and, crucially, decide on the fly whether to send it via the cache.

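```javascript
const puppeteer = require('puppeteer');
const request = require('request'); // callback-style HTTP client (now deprecated)

puppeteer.launch().then(async browser => {
  const page = await browser.newPage();

  // Route every network request through our 'request' handler below
  await page.setRequestInterception(true);

  page.on('request', interceptedRequest => {
    // This simple version only re-issues GETs; let Chrome handle anything else
    if (interceptedRequest.method() !== 'GET') {
      return interceptedRequest.continue();
    }

    // Fetch the resource ourselves; encoding: null keeps binary bodies (images) intact
    request.get({ url: interceptedRequest.url(), encoding: null }, (error, response, body) => {
      if (error) {
        // Answer every intercepted request, otherwise the page waits forever
        return interceptedRequest.abort('failed');
      }
      // Hand our response back to Chrome as if it had fetched it itself
      return interceptedRequest.respond({
        status: response.statusCode,
        contentType: response.headers['content-type'],
        body: body
      });
    });
  });

  // Ready for requests
});
```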
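The handler above always fetches directly, but because the call now happens in our own code, the routing decision can be made per request. Below is a minimal sketch of that, assuming a hypothetical `fetchVia(url, proxyUrl)` that resolves to `{ status, contentType, body }` and goes direct when `proxyUrl` is `null`, plus a hypothetical `isProxyHealthy()` check; neither is part of Puppeteer. From Puppeteer it only uses the request object's `url()`, `respond()` and `abort()` methods.

```javascript
// Sketch of an interception handler that chooses proxy vs direct per request.
// Assumed (hypothetical) helpers:
//   fetchVia(url, proxyUrl) -> Promise<{ status, contentType, body }>; null = direct
//   isProxyHealthy()        -> boolean, e.g. kept up to date by a health check
function makeRequestHandler(fetchVia, proxyUrl, isProxyHealthy) {
  return async function onRequest(interceptedRequest) {
    const url = interceptedRequest.url();
    let result;
    try {
      // The routing decision is made here, for every single request.
      result = await fetchVia(url, isProxyHealthy() ? proxyUrl : null);
    } catch (err) {
      // Every intercepted request must be answered, or the page will hang.
      return interceptedRequest.abort('failed');
    }
    return interceptedRequest.respond({
      status: result.status,
      contentType: result.contentType,
      body: result.body,
    });
  };
}
```

With Puppeteer it would be registered as `page.on('request', makeRequestHandler(fetchVia, PROXY_URL, () => proxyIsUp))`, where a flag such as `proxyIsUp` could be flipped by a periodic health check or by the first failed proxy request, without restarting the browser.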

Now, if the proxy breaks, we can instantly start sending requests around the fault. Amazingly, this works flawlessly, so I'll call that a success :)