Introduction

Almost every website on the internet today uses some form of analytics platform. These analytics allow site owners to measure how many people are using their site, what they are doing and who they are. This data allows sites to ensure their marketing is working, the home page is leading to conversions and their visitors' browsers are supported.

The first place everyone turns to is Google Analytics. It's free, easy to use and powerful, but it also comes with some privacy concerns for visitors to your site. Adding the Google Analytics tag to your site allows Google to track your users across websites, building an impressively complete picture of each person, who they are and what they do. With GDPR recently taking effect, it also introduces legal issues for site owners, who need to ensure they have permission to use all the data they (and Google) collect.

In reality Google Analytics collects a lot more data than is really required most of the time, and I certainly didn't want my tiny site to collide head-on with GDPR. So instead I set out to build an analytics platform that met my needs while avoiding most of the issues with third-party systems. By collecting only the data that is needed we can avoid having to worry about data protection legislation, and by using only first-party cookies users don't need to worry about their behaviour being tracked across the web as a whole.

The plan

The first thing to decide is which metrics we are interested in and what data needs to be collected to calculate them. By deciding this at the beginning we can avoid collecting data that isn't needed.

I decided on the following:

- Total number of page views over time

- Page popularity - How many views each page gets

- Page scroll distance - How many people actually read the whole page?

- Time on page

- Bounce rate

- How many visitors are new vs returning

- Browser & screen resolutions

- Referrers and utm_source querystrings

None of these metrics require tracking the user between sessions! This means we don't even need a permanent cookie id on the user's device. The only exception is recording which visitors are new vs returning, but that can be stored as a simple "has visited before" counter, nothing that could allow the user to be identified.
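As a rough illustration, such a counter might look like the sketch below. It assumes the count is kept in `localStorage` under a hypothetical key `n_visits`; the post does not say where the value actually lives. The storage object is passed in so the function can also run outside a browser.

```javascript
// Hypothetical key name (an assumption, not taken from the real site).
const VISITS_KEY = 'n_visits';

// Increment and return the visit count held in a Storage-like object,
// i.e. anything with getItem/setItem such as window.localStorage.
function recordVisit(storage) {
  const previous = parseInt(storage.getItem(VISITS_KEY), 10);
  // A missing or unreadable value is treated as "never visited".
  const visits = Number.isNaN(previous) ? 1 : previous + 1;
  storage.setItem(VISITS_KEY, String(visits));
  return visits;
}

// A visitor counts as "returning" once the counter has passed 1.
function isReturning(visits) {
  return visits > 1;
}
```

In a browser this would be called once at the start of a session, for example `const n_visits = recordVisit(window.localStorage);`. The stored value is a plain number shared by every visitor with the same visit count, so it does not identify anyone.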

Designing the system

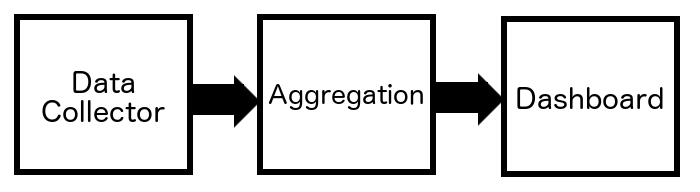

With the requirements scoped out it's time to plan the system. I ended up settling on a three-stage process. Each stage is backed by AWS Lambda, with the data stored in an AWS-managed Postgres instance, which means there are no servers to manage. This is important as we are competing with Google Analytics, which is incredibly easy to use and reliable.

Stage 1: Data collector

The first stage is some JavaScript that is placed on every page of the site. Once the page has finished loading, this code sends data to an API about which page the visitor is on and what they are doing.
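A minimal sketch of how a page might post an event to the collector is shown here. The endpoint path `/collect`, the JSON shape and the third `beacon` parameter are all assumptions made for illustration; the `send` function used on this site takes two arguments and its body is not shown in the post.

```javascript
// Hypothetical collection endpoint; the post does not name the real one.
const ENDPOINT = '/collect';

// Serialise one event as JSON: the event type plus its data fields.
function buildEvent(type, data) {
  return JSON.stringify({ type, data });
}

// Send one event. `beacon` is expected to behave like
// navigator.sendBeacon.bind(navigator): (url, body) => boolean.
function send(type, data, beacon) {
  return beacon(ENDPOINT, buildEvent(type, data));
}

// In a browser the first event could be sent once loading has finished:
// window.addEventListener('load', () =>
//   send('PAGE_VIEW', { path: location.pathname },
//        navigator.sendBeacon.bind(navigator)));
```

`navigator.sendBeacon` queues the request without blocking the page, which suits small fire-and-forget analytics events, but a plain `fetch` POST would work just as well here.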

Each request includes a session cookie id, which allows all the events from that session to be joined and analysed together. After 10 minutes of no activity the cookie is automatically deleted by the browser, ensuring that once the session ends, the data stored on the server can no longer be linked to the actual device that generated it.
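One way to get this rolling ten minute expiry is to rewrite the cookie with a fresh `Max-Age` every time an event is sent. The sketch below assumes a hypothetical cookie name `sid` and a caller-supplied id generator; neither comes from the actual site code.

```javascript
// Hypothetical cookie name; ten minutes of inactivity, in seconds.
const SESSION_COOKIE = 'sid';
const SESSION_MAX_AGE = 10 * 60;

// Read the session id out of a document.cookie-style string, or null.
function readSessionId(cookieString) {
  for (const part of cookieString.split(';')) {
    const [name, ...rest] = part.trim().split('=');
    if (name === SESSION_COOKIE) return rest.join('=');
  }
  return null;
}

// Build the cookie assignment. Writing it again on every event restarts
// the countdown, so the cookie only expires after ten idle minutes.
function buildSessionCookie(id) {
  return `${SESSION_COOKIE}=${id}; Max-Age=${SESSION_MAX_AGE}; Path=/; SameSite=Lax`;
}

// Reuse the current id if there is one, otherwise create a new one.
function nextSessionCookie(cookieString, makeId) {
  const id = readSessionId(cookieString) || makeId();
  return { id, cookie: buildSessionCookie(id) };
}
```

In a browser this could be used as `document.cookie = nextSessionCookie(document.cookie, () => crypto.randomUUID()).cookie;` before each event is sent. Because the id is random and short-lived, it carries no information about the device once the cookie has gone.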

There are two types of event generated on this site. The first is a "PAGE_VIEW" event. This is sent whenever a new page is opened by the user. It also contains detailed information about the device itself including the screen resolution, platform and browser.

    send(PAGE_VIEW, {
      path: location.pathname,
      qs: location.search,
      session_time: getSessionTime(),
      browserCodeName: navigator.appCodeName,
      browserName: navigator.appName,
      platform: navigator.platform,
      screenHei: window.screen.height,
      screenWid: window.screen.width,
      nv: n_visits
    });

The second type is a "PING" event. This is sent periodically while the user is interacting with a page. This includes information about how long the user has been on the page and how far down they have scrolled.

    send(PING, {
      page_time: getPageTime(),
      session_time: getSessionTime(),
      scroll_distance: getScrollDistance(),
      path: location.pathname,
      n: ++pingsSent
    });

These two events are all that this site required. A retailer might also want to record information about purchases, and a web app might want to record when someone creates an account. Building your own analytics platform lets you collect exactly the data you need and no more.
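The `getScrollDistance()` helper used in the PING event is not shown in this post. One possible definition, given here purely as an assumption, is the fraction of the page that has passed the bottom edge of the viewport. The calculation is kept separate from the browser globals so it can be checked on its own.

```javascript
// One possible definition of scroll distance: the fraction of the page
// (between 0 and 1) that has passed the bottom edge of the viewport.
function scrollFraction(scrollTop, viewportHeight, documentHeight) {
  if (documentHeight <= 0) return 0;
  const seen = (scrollTop + viewportHeight) / documentHeight;
  // Clamp so short pages and overscroll never report more than 100%.
  return Math.min(1, Math.max(0, seen));
}

// Browser wrapper (assumed, not taken from the post):
// const getScrollDistance = () => scrollFraction(
//   window.scrollY,
//   window.innerHeight,
//   document.documentElement.scrollHeight);
```

With this definition a visitor who never scrolls a page twice the height of their window reports 0.5, and anyone who reaches the bottom reports 1.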

Stage 2: Aggregation

The first stage could potentially create a lot of data, more than we really need. In reality this data is a liability: you have to worry about keeping it safe and about making sure that having more data to search through doesn't hurt performance.

For the long term there are only two things that I'm really interested in: the number of sessions and the number of page views. The aggregation step runs once per day and generates some general analytics for the day before. This data can be kept long term for future analysis, while the detailed event data can be deleted by the aggregator once it is no longer needed, which in this case is after 14 days.
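The logic of that daily job can be sketched in a few lines. This version works on an in-memory array purely for illustration; the real aggregator presumably runs queries against the Postgres instance, and the field names `type`, `sessionId` and `timestamp` (milliseconds since the epoch) are assumptions.

```javascript
const DAY_MS = 24 * 60 * 60 * 1000;
const RETENTION_DAYS = 14; // detailed events are kept for 14 days

// Summarise one UTC day of events into the two long-term numbers:
// distinct sessions and page views. `day` is a 'YYYY-MM-DD' string.
function aggregateDay(events, day) {
  const sessions = new Set();
  let pageViews = 0;
  for (const e of events) {
    if (new Date(e.timestamp).toISOString().slice(0, 10) !== day) continue;
    sessions.add(e.sessionId);
    if (e.type === 'PAGE_VIEW') pageViews += 1;
  }
  return { day, sessions: sessions.size, pageViews };
}

// Keep only the events that are still inside the retention window.
function pruneOldEvents(events, now) {
  const cutoff = now - RETENTION_DAYS * DAY_MS;
  return events.filter((e) => e.timestamp >= cutoff);
}
```

Only the small summary row per day needs to be kept indefinitely; everything returned by `pruneOldEvents` is at most two weeks old.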

Stage 3: The dashboard

Now we have all the data we need stored in a database, ready to be analysed and presented in an analytics dashboard. By building a custom system I was able to ensure that all the data required was collected and no more, protecting the privacy of visitors and avoiding any legal issues with the collection of this data.

For how this data was analysed and presented, move on to part 2.